How Enterprise CIOs Build & Buy Gen AI In 2025 | In The Loop Episode 21

Today, I want to deep-dive into an incredible piece of research from the famous VC firm a16z (Andreessen Horowitz), founded by the legendary entrepreneurs Marc Andreessen and Ben Horowitz. This research gives us perhaps the best insight yet into how AI buying has changed among enterprises, the organizations with the biggest budgets for AI products.

In today's In The Loop episode, I'll discuss three things I learned from reading their report. The research was based on interviews and surveys with over 100 Chief Information Officers (CIOs) across 15 industries. These are the people who buy large AI products for their companies, and the report shows how AI purchasing has changed.

As you'll see, one thing is for certain: the scale and speed of this adoption within enterprises are remarkable. If you're building or selling AI, working at a big company, or just trying to figure out how the world of AI is changing, you'll want to listen to the research findings.

This is In The Loop with Jack Houghton. I hope you enjoy the show.

Building versus buying AI products

Let’s dive into a16z’s report, How 100 Enterprise CIOs Are Building and Buying Gen AI in 2025 by Sarah Wang, Shangda Xu, Justin Kahl, and Tugce Erten. All the graphs you see in this article are sourced from this report.

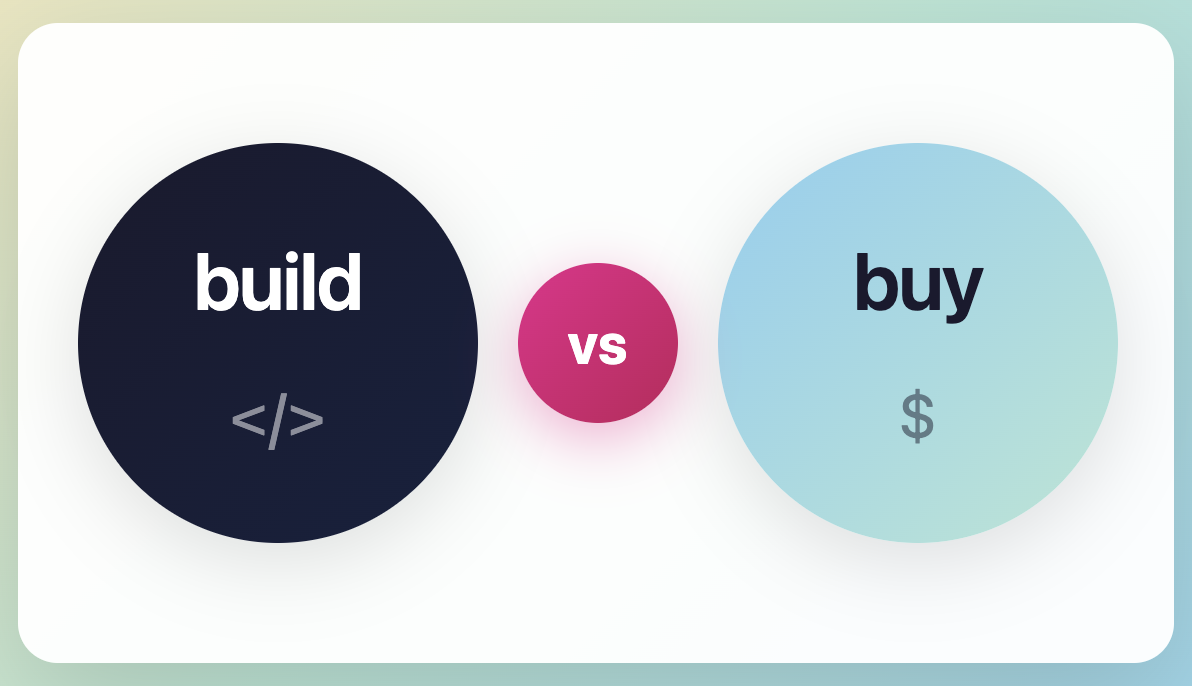

I'll start with something that particularly resonated with me. Two years ago, if you talked to big companies about AI, the conversation was about building AI solutions internally. They all have IT teams with developers, and leaders thought they needed to build their own AI capabilities, either because they couldn't rely on third parties for something strategic or because they wanted to maintain control over their AI destiny.

But the conversation has completely changed. The research found that 90% of enterprises are now testing third-party applications for use cases like customer support. What's striking is that customer support was one of the first areas where companies built internal solutions, because the use case seemed clear and the ROI was obvious. Yet nine out of ten companies are now looking at buying rather than building.

This makes sense. Previously, there were no ready-made solutions to buy, so teams pieced together different technologies and infrastructure to create their own. Companies like Mindset AI now provide that infrastructure inside an integrated AI development platform, making it simple to build new features. A couple of years ago, this didn't exist: people had to work out which parts of the technology to pick, how to glue them together, and what to build on top.

The research shows that in financial services, over 75% of customer support implementations now use third-party applications. Even in manufacturing—traditionally a conservative industry due to compliance—about 60% are buying AI applications.

The research cited a fintech company wrestling with this build-versus-buy dilemma. The company had a dedicated team making good progress, about 60% of the way there by their own estimate. But when new solutions appeared, they ran a competitive analysis and decided to buy instead of build, because the AI-native applications on the market were simply better.

You can see this with AI-native applications today. Some CTOs say 90% of their code is now AI-generated using tools like Cursor or Claude. That's a crazy number. Just 12 months ago, AI generated roughly 10-15% of their code. Going from about one line in ten to nine lines in ten in just 12 months is remarkable.

When researchers asked enterprise CIOs why they preferred AI-native applications, they said it was because these vendors innovate faster, and building from the ground up around AI creates a fundamentally better experience. It's like the early days of cloud computing, when every enterprise tried to build its own cloud infrastructure; then AWS and other providers came along and did it much better, and building your own became ridiculous. We're seeing the same pattern with AI applications at the enterprise level.

If you're a technology vendor or SaaS provider right now, you need to move fast, because enterprises want to buy best-in-class solutions. The data on user satisfaction backs this up: the research found that AI-native solutions average a Net Promoter Score (NPS) 40 to 50 points higher than traditional SaaS products. That's huge.
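For context, NPS is conventionally computed from a 0-10 "how likely are you to recommend us?" survey: the percentage of promoters (scores of 9-10) minus the percentage of detractors (scores of 0-6), so it ranges from -100 to +100. The short Python sketch below uses made-up survey responses, not data from the report, to show what a 50-point gap looks like on that scale.

```python
def nps(scores: list[int]) -> float:
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("need at least one survey response")
    if any(s < 0 or s > 10 for s in scores):
        raise ValueError("scores must be on the 0-10 scale")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)

# Hypothetical responses for two products (illustrative only, not from the report).
traditional_saas = [9, 8, 7, 6, 5, 9, 8, 4, 10, 7]
ai_native = [10, 9, 9, 8, 10, 9, 7, 10, 8, 6]

print(nps(traditional_saas))  # → 0.0  (3 promoters, 3 detractors)
print(nps(ai_native))         # → 50.0 (6 promoters, 1 detractor)
```

Because passives (7-8) count toward neither group, moving a handful of customers from "fine" to "would actively recommend" shifts the score quickly, which is why a gap of 40-50 points is so large.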

Mindset AI is a development platform that helps SaaS vendors become AI-native technology providers. We're seeing these trends play out in real time because we allow product management and dev teams to build customer-facing AI agents and features on our platform and plug them into their products.

What we're seeing now is people retrofitting chatbots into their products, but in an AI-native product, everything is controlled through AI, using natural language. You tell the application what to do, and it does it. Over time, all interfaces will melt away because people just want to have a conversation to get results. That's where all technology providers are heading. I'm always ambitious and probably overly optimistic, so I'd say we'll see this happen within 18 months; in reality, this journey will go on for many years.

The build-versus-buy dilemma is ending, and enterprises are prioritizing buying, driven by the improved quality of AI-native platforms and products.

How much do enterprises spend on AI?

Let's move to another key thread from the research: the huge budget explosion. With new technologies, you typically see gradual adoption—pilot programs, small starts, then slow budget growth as technology becomes useful and accepted.

Last year, Wall Street was questioning whether large enterprises would ever find AI useful enough to adopt it at scale beyond pilots. Those doubts have proved wildly wrong, because AI has broken all the rules.

The research found that enterprise AI budgets have grown 75% beyond what companies projected they would spend, and these were already aggressive projections. What's crazier is that enterprise leaders predict another 75% growth over the next year. To put that in context, typical enterprise software budgets increase maybe 10-15% annually. This is massive spend and growth in the AI sector.
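To make that comparison concrete, here is the compounding arithmetic as a short Python sketch. The starting budget of 1.0 is arbitrary, and extending 75% growth into a second year is an illustrative assumption; the report only gives a one-year projection.

```python
def grow(budget: float, annual_rate: float, years: int) -> float:
    """Compound a budget at a fixed annual growth rate."""
    return budget * (1 + annual_rate) ** years

start = 1.0  # normalized starting budget (arbitrary units)

scenarios = [
    ("AI budget", 0.75),
    ("typical software, low", 0.10),
    ("typical software, high", 0.15),
]
for label, rate in scenarios:
    one_year = grow(start, rate, 1)
    two_years = grow(start, rate, 2)  # assumes the same rate holds for a second year
    print(f"{label}: {one_year:.2f}x after 1 year, {two_years:.2f}x after 2 years")
```

At 75% a year, a budget roughly triples in two years (about 3.06x), while 10-15% annual growth adds only about a fifth to a third over the same period.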

The numbers tell only part of the story. What's interesting is where the money is coming from. Last year, innovation budgets (essentially R&D experiments) made up about 25% of total AI spending. This year, that share has dropped to 7%. AI has graduated from experimental technology to core business strategy, and companies are no longer funding it mainly from R&D budgets.

According to the research, 77% now comes from IT budgets, 16% from business units, and only 7% from innovation funds. This shift has huge implications most people don't appreciate yet. When spending innovation budgets, you can experiment, pilot things, make mistakes, and try crazy ideas. But when you're spending core IT budgets, you need reliability, proven ROI, and vendors who can deliver at enterprise scale.

This is exactly why the budget shift is driving the build versus buy reversal. With innovation budgets, you could justify building internally because learning was part of the value. With core IT budgets, you need solutions that work today.

The research highlighted two reasons behind this budget explosion:

- Enterprises are discovering loads of new use cases: internal enterprise search, data analysis, document processing—seeing 60-80% adoption rates across companies. That's huge. Think of when new HR software is introduced: how many people ever log into those things?

- Customer-facing AI use cases are emerging: one large technology provider interviewed said they've been focused on internal use cases, but now they're shifting budget and effort into customer-facing applications.

This is exactly what we’re seeing at Mindset AI. We enable technology companies to build customer-facing AI agents and features, and we're seeing agentic experiences and AI automations happening inside technology providers' platforms at scale. It's magical and difficult to achieve. One of our customers launched 20 pages of customer-facing use cases.

What's interesting here is that internal use cases have limits: you can only automate so many internal processes. Customer-facing applications, by contrast, offer almost unlimited possibilities. This trend is worth noting for providers whose revenue scales with application usage.

LLM consolidation isn’t happening

Let's move to the third and final key trend from this research: how wrong most of us were about large language model providers. This is where I was personally wrong—I predicted something and now have egg on my face.

Conventional wisdom says that as technologies mature, they consolidate. Enterprises pick a winner, big companies buy smaller ones, everyone standardizes on a handful of common platforms, and costs get driven down through volume. The LLM market is doing the exact opposite.

Last year, it felt like the large language model space was converging and becoming similar. How could you tell which was better, or why use this one versus that one? Everyone assumed the space was becoming commoditized, only getting cheaper with no differentiation between models. We were all wrong.

Now 37% of enterprises are using five or more models in production: not testing, not pilots, but full production. That's up from 29% just 12 months ago. Look at experimentation and the number jumps to 52% testing five or more models.

You might think this is because companies don't want to be locked into a single large language model—enterprises have learned that putting all your eggs in one basket with a single technology provider is dangerous. But the research showed this isn't the case. The main driver is optimizing for performance based on specific use cases.

For those who listen to this podcast weekly, I've covered how certain LLMs have specialized in specific use cases and done incredibly well. What this research shows is that most of us missed a key factor: the enterprise large language model provider layer has not been commoditized. Instead, it's specialized—the exact opposite of what everyone thought would happen 12 months ago.

Some examples from the research: Anthropic models excel at coding tasks. What's interesting is the nuanced specialization even within coding tasks. Claude performs better on detailed, fine-grained coding tasks, while Gemini is better at high-level system design and architecture. Even within a use case, models are specializing.
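In practice, running several models in production often comes down to a thin routing layer that maps each use case to the model chosen for it. The Python sketch below is hypothetical: the use-case names, provider labels, and model identifiers are placeholders, and the mapping simply mirrors the examples above rather than any benchmark or real provider API.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ModelChoice:
    provider: str  # placeholder provider label
    model: str     # placeholder model identifier, not a real API model name

# Hypothetical routing table: each use case maps to the model chosen for it.
ROUTES: dict[str, ModelChoice] = {
    "fine_grained_coding": ModelChoice("anthropic", "claude-placeholder"),
    "system_design": ModelChoice("google", "gemini-placeholder"),
    "customer_support": ModelChoice("openai", "gpt-placeholder"),
}

# Fallback used when a use case has no explicit route.
DEFAULT = ModelChoice("openai", "gpt-placeholder")

def route(use_case: str) -> ModelChoice:
    """Return the model configured for a use case, or the default if none is set."""
    return ROUTES.get(use_case, DEFAULT)

print(route("system_design"))
print(route("unlisted_task"))
```

The table itself is trivial; the real work is everything each entry accumulates over time (tuned prompts, evaluations, integrations), which is where switching costs build up.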

However, when it comes to overall dominance, OpenAI still leads on market share. Its broad model lineup is also paying off: 67% of OpenAI users have deployed non-frontier models in production, compared with 40% for Google and 27% for other providers. Even though many people criticized how many models OpenAI offers, that strategy has worked well. I don't know how long that will last.

Google is rising quickly, especially with big companies of over 10,000 employees. Part of that is Google's existing relationships with large enterprises, and part is cost. For example, Gemini 2.5 costs 26 cents per million tokens, while GPT-4 costs 70 cents. That makes Google roughly 2.7 times cheaper, which could become a major factor for big companies.
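Here is how those per-token prices translate into spend. This Python sketch takes the 26-cent and 70-cent figures at face value and assumes a hypothetical volume of 1 billion tokens per month; real bills depend on separate input and output pricing, model versions, and negotiated discounts.

```python
# Prices per million tokens in USD, as quoted in the text.
PRICE_PER_MILLION_USD = {
    "Gemini 2.5": 0.26,
    "GPT-4": 0.70,
}

monthly_tokens = 1_000_000_000  # hypothetical workload: 1 billion tokens per month

def monthly_cost(price_per_million: float, tokens: int) -> float:
    """Cost in USD for a token volume at a given price per million tokens."""
    return price_per_million * tokens / 1_000_000

costs = {name: monthly_cost(p, monthly_tokens) for name, p in PRICE_PER_MILLION_USD.items()}
for name, cost in costs.items():
    print(f"{name}: ${cost:,.2f} per month")

ratio = PRICE_PER_MILLION_USD["GPT-4"] / PRICE_PER_MILLION_USD["Gemini 2.5"]
print(f"Price ratio: {ratio:.2f}x")
```

At this volume the gap is about $260 versus $700 a month, and the ratio works out to about 2.7, so "nearly three times cheaper" is the more precise framing. At enterprise scale the absolute difference grows linearly with token volume.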

But the challenge is that switching costs are rising. Enterprises build up prompt configurations that tell each LLM how to behave in specific circumstances, plus the integrations and orchestration tools around them. As big enterprises build more sophisticated agentic workflows, switching between models becomes very difficult.

The research shows 68% of enterprises now find it either difficult or very difficult to switch models, up from 43% last year. A CIO quoted said, "All of our prompts and systems have been built around OpenAI. Each one has a specific set of instructions and details, so it's a very big task to swap that out."

This creates a fascinating dynamic. The multi-model approach gives enterprises flexibility and optimization in the short term, but as individual workflows become more complex, switching becomes more expensive. If I were a large language model provider, I'd want lots of different models with strong performance on specific tasks; that strategy should do well long term.

What does this all mean for tech vendors?

These are fascinating themes. If I step back and think about what this means for business leaders, technology leaders, and people in this space, I think first and foremost, for those building technology, this is the best time in the world to be building technology.

The build versus buy shift signals that the application layer is maturing fast. We're moving into a world where the best products are starting to win. This follows the exact same pattern as cloud computing or mobile app development—pretty much every major technology shift. But the speed is something we've never seen before. These transitions usually take five to seven years, but this is happening in 18 months.

Second, this budget explosion tells us that AI isn't a bubble or fad—enterprises spend their core budgets on AI applications. If you're in this space, you can take massive leaps forward. But the challenge is that you must have a genuinely best-in-class product to win.

If you're in the space, I hope this research acts as another kick to move faster. Thanks for today. Hope you found that helpful, and I'll see you next week.

