11 AI Predictions That Will Shape Product Development In 2026

We're at the start of 2026, and something fundamental has shifted in how companies think about AI. The hype is still there. What has changed completely is where the actual pressure is coming from.

Only 39% of organizations report bottom-line EBIT impact from AI at the enterprise level, according to McKinsey's 2025 State of AI report. That gap between investment and measurable return is reshaping every conversation happening in boardrooms right now. In 2024, boards asked, “Should we experiment with AI?” In 2025, the question evolved to “How do we deploy AI?” Now in 2026, it's brutally simple: “Can you prove this is working?”

CFOs are demanding spreadsheets, not vision decks or strategic frameworks. They want actual data on what's working, what isn't, and where the money is going. The pressure to demonstrate real business outcomes has never been more intense.

At Mindset AI, we've spent the past year watching companies succeed or struggle with AI implementation. Here's what we've learned: the winners aren't the ones with the most sophisticated models or the biggest AI teams. They're the ones who figured out where to focus their engineering capacity, how to measure what actually matters, and how to build systems that solve business problems rather than just demo well.

These 11 predictions aren't about what's technically possible in some distant future. These are the trends that will separate winners from laggards in 2026, based on what's actually happening right now in product teams around the world.

AI prediction 1: The frontend no longer differentiates

Every company building AI products is converging on the same interface pattern. You type naturally, AI responds, and interactive elements appear when needed. If you've used one conversational AI interface, you've essentially used them all. Amazon's ad management agent looks remarkably similar to Yahoo's, which looks like Google's.

Consider this: More than 1 billion people use AI interfaces monthly. ChatGPT, Claude, Gemini, and Perplexity have trained an entire generation on how to talk to AI. That expectation has transferred everywhere users encounter AI products. The conversational interface itself creates zero competitive advantage anymore.

The strategic implication? Stop investing engineering time building conversational interfaces that your competitors are also building. Those resources could be driving real differentiation elsewhere in your product.

AI prediction 2: Engineering capacity shifts to the intelligence layer

Here's the strategic decision happening right now across product teams: companies are choosing to stop building infrastructure that everyone needs identically and redirecting engineering effort towards capabilities that make them unique.

Think about what engineering teams actually spend time on today. Months go into building conversational interfaces that every competitor is also building. Weeks disappear into rendering systems that every company needs in exactly the same way. Meanwhile, the things that actually create value (intelligence about the problem domain, algorithms that work better than alternatives, unique ways of solving customer problems) get a fraction of engineering attention.

If you're building sales software, your real advantage isn't in having a chat interface. It's in predicting which deals will close based on patterns your competitors don't see. If you're building logistics software, it's knowing where delays will happen before they occur. This middle layer, the intelligence built on proprietary data and domain expertise, is where competitive advantage actually lives.

The path forward is clear: rent commodity infrastructure and redirect 100% of engineering capacity to building intelligence that makes your product uniquely valuable. That's where you'll win in the market.

Want to see what this intelligence-first approach looks like?

AI prediction 3: Conversations become visual and interactive

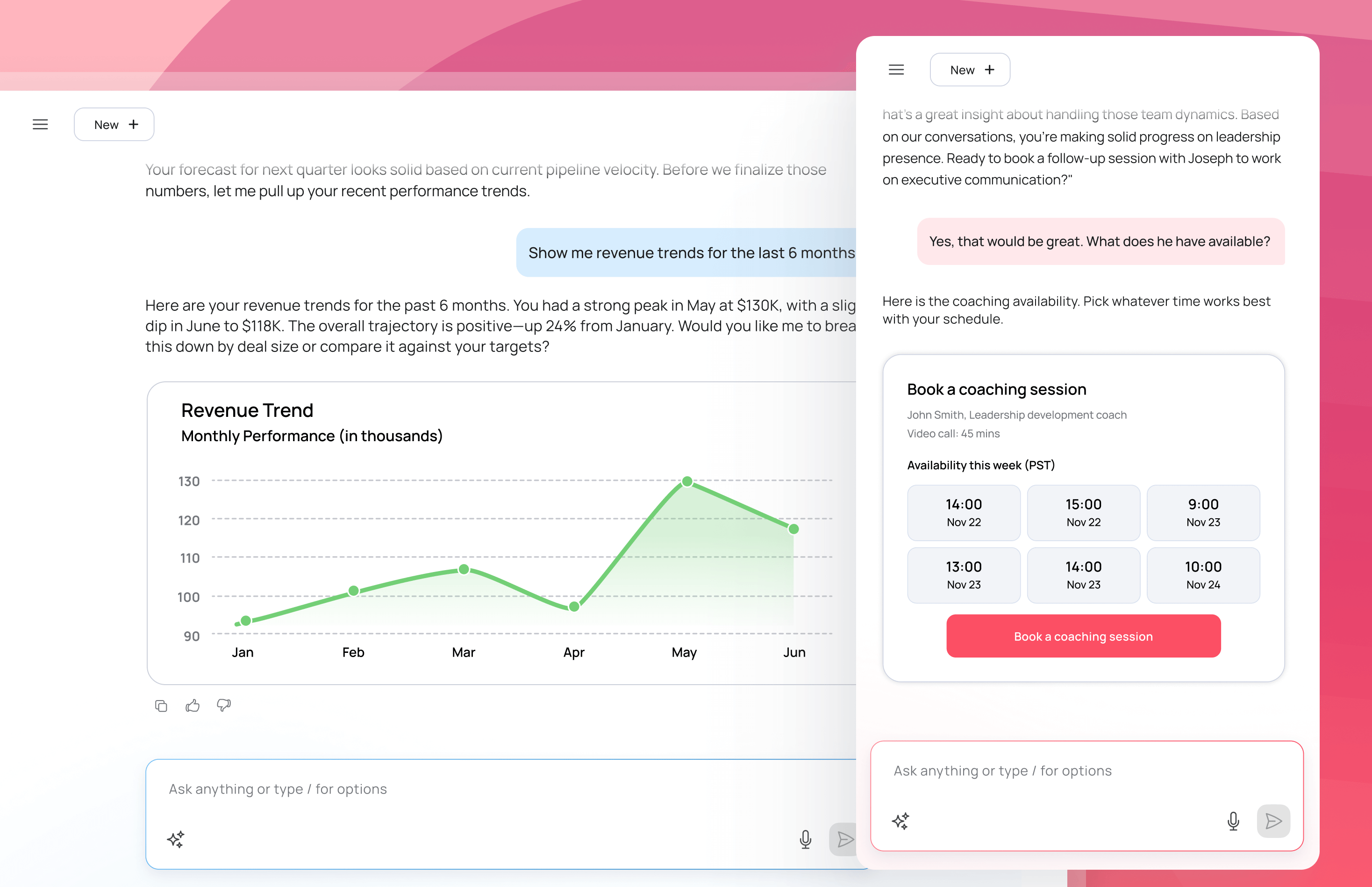

Text-only AI worked fine for simple questions, but it breaks down fast when complexity enters the picture. Spotify, Expedia, and Instacart now have full interfaces appearing inside ChatGPT - not links taking you elsewhere, but actual apps that are functional and interactive, rendering mid-conversation.

Think about booking flights through text descriptions of options. It's painful compared to seeing actual flight cards with prices, times, and availability. Analyzing data through text descriptions doesn't compare to actual charts you can filter and explore. Claude launched the same capability recently, where services define interface components that render inside conversations. Ask about meeting times, get an interactive calendar widget. Ask about sales trends, get a chart you can manipulate.
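To make the idea of services defining interface components concrete, here is a minimal sketch of a message that carries structured components alongside text. The `FlightCard` and `AssistantMessage` schemas and the `render_payload` helper are hypothetical names chosen for illustration; they are not the format ChatGPT, Claude, or any other product actually uses.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class FlightCard:
    """Hypothetical component schema, not any vendor's real format."""
    airline: str
    depart: str
    arrive: str
    price_usd: float
    type: str = "flight_card"  # tells the client which widget to draw


@dataclass
class AssistantMessage:
    """A reply made of plain text plus zero or more renderable components."""
    text: str
    components: list = field(default_factory=list)


def render_payload(message: AssistantMessage) -> str:
    """Serialize the message so a client could render each component by type."""
    return json.dumps(asdict(message), indent=2)


message = AssistantMessage(
    text="Here is one option for Tuesday morning.",
    components=[FlightCard("ExampleAir", "08:05", "11:40", 214.0)],
)
print(render_payload(message))
```

The point of the structure is that the client decides how a `flight_card` looks, while the service only supplies the data. That keeps the conversation layer generic and the component reusable.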

Text-only AI responses will feel as outdated as DOS commands by year-end. The winners will be building visual, interactive components that make complex information actually usable.

AI prediction 4: Agent reasoning replaces rigid workflows

Most companies default to workflows because they feel controllable. You can drag boxes, connect arrows, define if-then logic, and literally see what will happen. But real-world tasks rarely fit into predetermined steps the way workflow builders assume they will.

Take a customer support ticket where someone reports three issues: a duplicate charge, a failed cancellation, and an invalid payment method, all on the same ticket. Workflow approaches try to handle each issue sequentially, one after another. But what if the “duplicate” charge was actually an upgrade? What if the cancellation failed because there's a pending charge? What if the payment shows as invalid because there's a dispute filed?

These issues are interconnected in ways that workflows can't handle elegantly. Agent reasoning assesses the full situation differently. It recognizes that three issues were reported, understands they're related, sees that the card dispute is causing the payment error, recognizes the pending upgrade is blocking cancellation, and addresses the root cause (the card dispute), which resolves everything.
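A rough way to picture the difference: a sequential workflow produces one action per reported issue, while a reasoning step first asks what causes what. The sketch below hard-codes a hypothetical cause map for the ticket described above (in a real agent, the model would infer these links from account data), and it assumes the upgrade charge is pending because of the card dispute.

```python
# Hypothetical cause map for the example ticket. Each issue points to the
# issue that causes it, or to None if it is a root cause.
# Assumption: the upgrade charge is stuck pending because of the card dispute.
CAUSED_BY = {
    "duplicate_charge": "pending_upgrade_charge",
    "failed_cancellation": "pending_upgrade_charge",
    "invalid_payment_method": "card_dispute",
    "pending_upgrade_charge": "card_dispute",
    "card_dispute": None,
}

REPORTED = ["duplicate_charge", "failed_cancellation", "invalid_payment_method"]


def root_cause(issue, caused_by):
    """Follow cause links until reaching an issue with no known cause."""
    seen = set()
    while caused_by.get(issue) is not None:
        if issue in seen:
            raise ValueError(f"cycle in cause map at {issue!r}")
        seen.add(issue)
        issue = caused_by[issue]
    return issue


def root_causes(reported, caused_by):
    """Collapse the reported issues into the distinct root causes behind them."""
    return {root_cause(issue, caused_by) for issue in reported}


workflow_steps = len(REPORTED)                 # one fix per reported symptom
agent_targets = root_causes(REPORTED, CAUSED_BY)
print(workflow_steps, agent_targets)
```

Three symptoms collapse into a single target, which is the behavior the ticket example describes.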

Here's what surprises most teams: with proper testing infrastructure in place, agent reasoning is actually more predictable in production than rigid workflows. Stop defaulting to Zapier-style workflows for complex tasks and embrace agent reasoning with comprehensive testing.

AI prediction 5: Agent building becomes conversational

Right now, building agents requires technical skills that most domain experts don't have. Tools like Zapier and similar platforms require thinking like a programmer: understanding variables, data types, conditional logic, and error handling. Even “low-code” tools still demand a technical mindset that creates a barrier.

What's emerging changes that dynamic entirely. You describe what the agent should do in plain language, and the system builds the configuration. “I need an agent that monitors support tickets, figures out which ones are urgent, and routes them to the right person. If a ticket sits for more than 24 hours, escalate it to a manager.” That's it. The system translates that description into working logic.
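As an illustration, the plain-language description above might be translated into a configuration like the one below. Everything here is a hypothetical sketch: the `AgentConfig` fields, the team names, and the keyword list are invented for the example, and the keyword match is only a stand-in for the urgency judgment a model would make.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class AgentConfig:
    """Hypothetical config a builder might generate from the description."""
    urgent_keywords: tuple      # stand-in for model-based urgency detection
    routes: dict                # ticket topic -> owning team
    default_team: str
    escalate_after: timedelta
    escalate_to: str


CONFIG = AgentConfig(
    urgent_keywords=("outage", "cannot log in", "charged twice"),
    routes={"billing": "billing-team", "login": "identity-team"},
    default_team="general-support",
    escalate_after=timedelta(hours=24),
    escalate_to="support-manager",
)


def handle(ticket: dict, now: datetime, config: AgentConfig) -> dict:
    """Flag urgency, route by topic, and escalate tickets older than the limit."""
    text = ticket["text"].lower()
    urgent = any(keyword in text for keyword in config.urgent_keywords)
    assignee = config.routes.get(ticket["topic"], config.default_team)
    if now - ticket["opened_at"] > config.escalate_after:
        assignee = config.escalate_to
    return {"urgent": urgent, "assignee": assignee}


opened = datetime(2026, 1, 5, 9, 0)
ticket = {"text": "I was charged twice", "topic": "billing", "opened_at": opened}
print(handle(ticket, opened + timedelta(hours=2), CONFIG))
print(handle(ticket, opened + timedelta(hours=25), CONFIG))
```

The domain expert never sees this structure. They describe the behavior, review what the agent does, and refine the description.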

This shift expands who can build agents from technically skilled knowledge workers to anyone who can clearly describe what needs to happen. The people who best understand what agents should do have always been domain experts: support managers who know exactly what routing logic their teams need, operations directors who understand which processes bottleneck and why, finance analysts who can articulate what makes a transaction suspicious.

These experts can already describe needs in extraordinary detail, including nuances, edge cases, and priorities. They just couldn't build it in existing tools. That barrier is disappearing. Domain experts will build agents directly, without submitting engineering tickets and waiting for capacity.

AI prediction 6: Testing becomes the confidence layer

The problem with deploying agents isn't capability, it's confidence. Klarna deployed agents that cut customer support resolution time by 80%. They got there through comprehensive testing that built confidence across thousands of scenarios.

Traditional software testing doesn't work because agent behavior isn't deterministic. The answer is scenario-based testing at scale, using AI to evaluate AI. Build a comprehensive testing infrastructure now. You can't prove ROI without measurement, and you can't measure what wasn't tracked from the beginning.
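Here is a minimal sketch of what scenario-based testing can look like. Because agent output varies between runs, each scenario is run several times and scored as a pass rate rather than a single pass or fail. The `stub_agent` and `stub_judge` functions are placeholders so the example runs on its own; in practice the agent would be your deployed system and the judge would be a model call grading the response against the rubric.

```python
from typing import Callable

# Hypothetical scenarios: an input plus a rubric the response must satisfy.
SCENARIOS = [
    {"name": "duplicate_charge", "input": "I was charged twice this month",
     "rubric": "refund"},
    {"name": "vague_request", "input": "It is broken", "rubric": "more details"},
]


def stub_agent(prompt: str) -> str:
    """Placeholder for the agent under test."""
    if "charged twice" in prompt.lower():
        return "I have issued a refund for the duplicate charge."
    return "Could you share more details?"


def stub_judge(response: str, rubric: str) -> bool:
    """Placeholder judge; a real one would ask a model to grade the response."""
    return rubric.lower() in response.lower()


def run_suite(agent: Callable[[str], str], judge: Callable[[str, str], bool],
              scenarios: list, runs: int = 5) -> dict:
    """Run every scenario several times and return its pass rate."""
    results = {}
    for scenario in scenarios:
        passes = sum(
            judge(agent(scenario["input"]), scenario["rubric"])
            for _ in range(runs)
        )
        results[scenario["name"]] = passes / runs
    return results


def below_threshold(results: dict, threshold: float = 0.9) -> list:
    """List the scenarios whose pass rate is too low to ship."""
    return sorted(name for name, rate in results.items() if rate < threshold)


results = run_suite(stub_agent, stub_judge, SCENARIOS)
print(results, below_threshold(results))
```

Scaling this up mostly means growing the scenario list and storing pass rates over time, so a regression shows up as a drop in a number rather than a customer complaint.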

We'll be demonstrating real testing frameworks and measurement strategies in our February 19th webinar. See how to build the confidence layer your team needs to deploy agents at scale.

AI prediction 7: Knowledge work shifts from doing to managing

In early January, Anthropic launched Cowork. You give it access to a folder, describe what needs doing, and it handles the rest. It makes a plan, executes, and delivers finished work. Financial reports that took three hours now complete in 20 minutes.

GPT-5.2, launched in December 2025 and optimized for professional knowledge work, beats or ties top professionals on 71% of evaluations across 44 occupations. It produces outputs 11 times faster at less than 1% of the cost.

Knowledge work is shifting from doing tasks to managing agents. Instead of writing copy, you're evaluating AI-generated copy. Instead of building models cell by cell, you're directing agents and validating logic. Instead of manually researching, you're reviewing AI synthesis.

Skills that matter are transforming from execution speed to judgment quality, from doing to directing. AI is expected to improve employee productivity, but that improvement only materializes when organizations reorganize around managing outcomes rather than doing specialized tasks.

AI prediction 8: 2026 is the year of AI agent managers

The pathway to AI agents runs through agent management, not full autonomy. You direct AI agents, check progress, and make strategic decisions, but agents handle execution. People are delegating complex tasks:

- “Turn this outline into a presentation.”

- “Analyze sales data and identify trends.”

- “Research competitor pricing.”

AI agents handle research, analysis, and generation, while humans review, direct, validate, and make final decisions. This preserves human judgment in the loop. You're not trusting autonomous agents with critical decisions, but you're also not doing all the execution work. Over time, some managed tasks will transition to full autonomy. That's the pathway, through managed delegation that builds trust.

AI prediction 9: Domain experts become builders

There's a new role emerging: people in departments who combine domain expertise with AI-building capability. Not professional engineers, but domain experts who can build solutions to their own problems. Companies are hiring for roles that didn't exist 18 months ago: people with functional domain experience plus the ability to build with AI tools. These people are helping departments access building capabilities they couldn't use before. Marketing ops people are describing campaign dashboards and seeing them built. Success managers are creating custom views without submitting IT tickets. Finance analysts are building specialized reports. They're not professional developers; they're domain experts who learned to describe clearly and iterate.

Hire for domain expertise plus AI-building capability. The best solutions come from people closest to the problems.

AI prediction 10: Memory becomes a competitive moat

Claude launched memory in late 2025; ChatGPT has had it for over a year. It's already a major barrier to switching models. If Gemini releases a better model tomorrow, you'd probably stick with Claude for strategic work because recreating all that context would take forever.

But here's where this is really going: it's not just conversation memory. Real competitive advantage comes from aggregating intelligence across every touchpoint. What documents were referenced, what features were used and when, what patterns emerged in work habits, and what format preferences exist.

For example, if you're building sales software, memory contains insights about specific customer patterns: buying behavior, decision processes, and past interactions. That's proprietary data competitors can't access. Memory infrastructure is becoming a commodity. What creates advantage is the quality and uniqueness of data flowing into those systems.

AI prediction 11: ROI measurement shifts from vibes to data

2026 is when AI measurement shifts decisively from vibes to hard data. McKinsey's finding that only 39% of organizations report bottom-line EBIT impact from AI often reflects inadequate measurement rather than inadequate technology. Most companies operate on anecdotal evidence: “The team feels more productive.”

The pressure is real: 61% of senior business leaders feel significantly more pressure to prove ROI compared to a year ago. Companies that didn't build monitoring from day one will struggle to prove ROI retroactively. The organizations demonstrating clear AI value share one thing in common: they instrumented their systems from deployment, not after stakeholders started asking questions.
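Instrumenting from deployment can start very small: record, for every task an agent handles, how long it used to take, how long it takes now, and what the agent cost. The sketch below assumes you have measured baselines before rollout; the `TaskEvent` fields, the task names, and all the numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TaskEvent:
    """One agent-assisted task, logged at the time it happens."""
    task: str
    baseline_minutes: float   # measured before the agent was introduced
    actual_minutes: float     # measured with the agent
    agent_cost_usd: float     # model and tooling spend for this task


def summarize(events: list, hourly_rate_usd: float) -> dict:
    """Turn logged events into the figures a CFO would ask for."""
    saved_minutes = sum(e.baseline_minutes - e.actual_minutes for e in events)
    hours_saved = saved_minutes / 60
    cost = sum(e.agent_cost_usd for e in events)
    return {
        "hours_saved": hours_saved,
        "agent_cost_usd": cost,
        "net_value_usd": hours_saved * hourly_rate_usd - cost,
    }


# Hypothetical numbers for illustration only.
EVENTS = [
    TaskEvent("monthly_report", baseline_minutes=180, actual_minutes=20,
              agent_cost_usd=1.50),
    TaskEvent("pricing_research", baseline_minutes=50, actual_minutes=30,
              agent_cost_usd=0.50),
]

print(summarize(EVENTS, hourly_rate_usd=60))
```

The calculation is trivial. The hard part is having `baseline_minutes` at all, which is why the logging has to exist before anyone asks for the report.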

Build observability from the start. By 2027, CFOs will expect measurement from day one as table stakes.

What this all means for product leaders

If you're a product leader or CTO, these aren't just trends to watch; they're warnings about where you need to be proactive in Q1 2026.

The critical decisions: where you're investing engineering capacity, how you're building confidence in agent systems, and whether you're capturing data to prove value. Companies still building commodity infrastructure while competitors focus on intelligence are falling behind. Teams defaulting to rigid workflows are discovering that agent reasoning delivers better results. Organizations without monitoring infrastructure won't prove ROI six months from now.

We're only weeks into 2026. Organizations that act in Q1 will compound their advantages throughout the year.

Book a demo today.
