Why Your AI Agents Need Visual Intelligence (Not Just Text Responses)

Your AI agent can query databases, process transactions, and handle complex workflows. But when it tries to explain a competency gap analysis across 2,000 employees in plain text, users can get lost. When it describes flight options in paragraphs instead of showing interactive cards, booking becomes painful. When it explains quarterly sales trends through bullet points instead of charts, the patterns disappear.

Text-only AI responses work fine for simple questions. But they break down completely when complexity enters the picture. The gap between what your agent knows and what users can understand comes down to one thing: visual intelligence.

Most AI agents still respond with walls of text. But users expect more than that now; they expect visual, interactive interfaces that make complex information actually usable. If your product's agent isn't rendering rich UI inside conversations, there's a gap between what you're shipping and what your users already take for granted.

The text-only AI agent limitation holding you back

Think about how you actually work with information. When analyzing quarterly performance, you do not want someone describing trends in paragraphs. You want to see the chart, filter specific segments, zoom into time periods that matter, and compare metrics visually. When booking travel, you do not want text descriptions of flight options. You want cards showing prices, times, and connections side by side.

Now consider what happens when your AI agent handles these same tasks using only text. The agent queries your database perfectly, retrieves exactly the right information, and processes everything intelligently, but then it has to communicate those results through paragraphs and bullet points.

Users read three paragraphs describing data patterns and get confused. They ask follow-up questions, trying to understand what the agent already found. Back-and-forth exchanges waste time. Eventually, they either give up or export the data to build their own visualization. Your agent did everything right except the only part users actually see.

Research from the Nielsen Norman Group confirms this pattern. Their usability studies on AI chatbots found that users “almost always engage in multi-step iteration because the AI doesn’t deliver exactly what the user wants,” and that a text-only interface makes this iteration painful.

This is not an intelligence problem or a capability problem; it's a fundamental limitation of text as a medium for complex information. Frequently cited research suggests that people retain about 65% of information paired with relevant visuals three days later, compared to only 10-20% of written or spoken information. Some data simply cannot be communicated effectively through words alone, regardless of how well-written those words are.

What users expect from AI agents

User expectations have fundamentally shifted. More than 1 billion people now use AI interfaces like ChatGPT, Claude, and Gemini every month. These platforms have shown an entire generation what is possible when you combine conversational AI with visual interfaces.

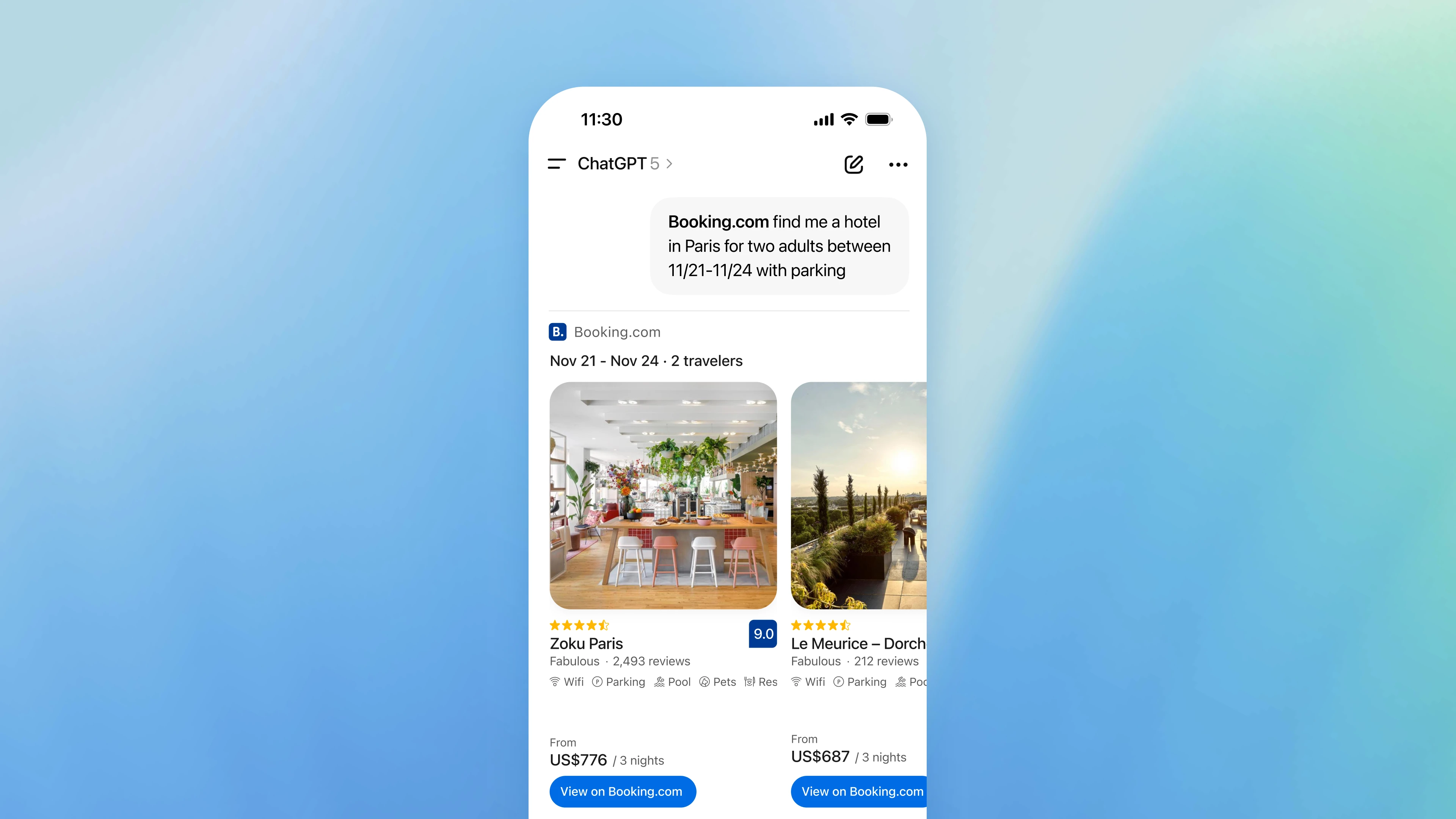

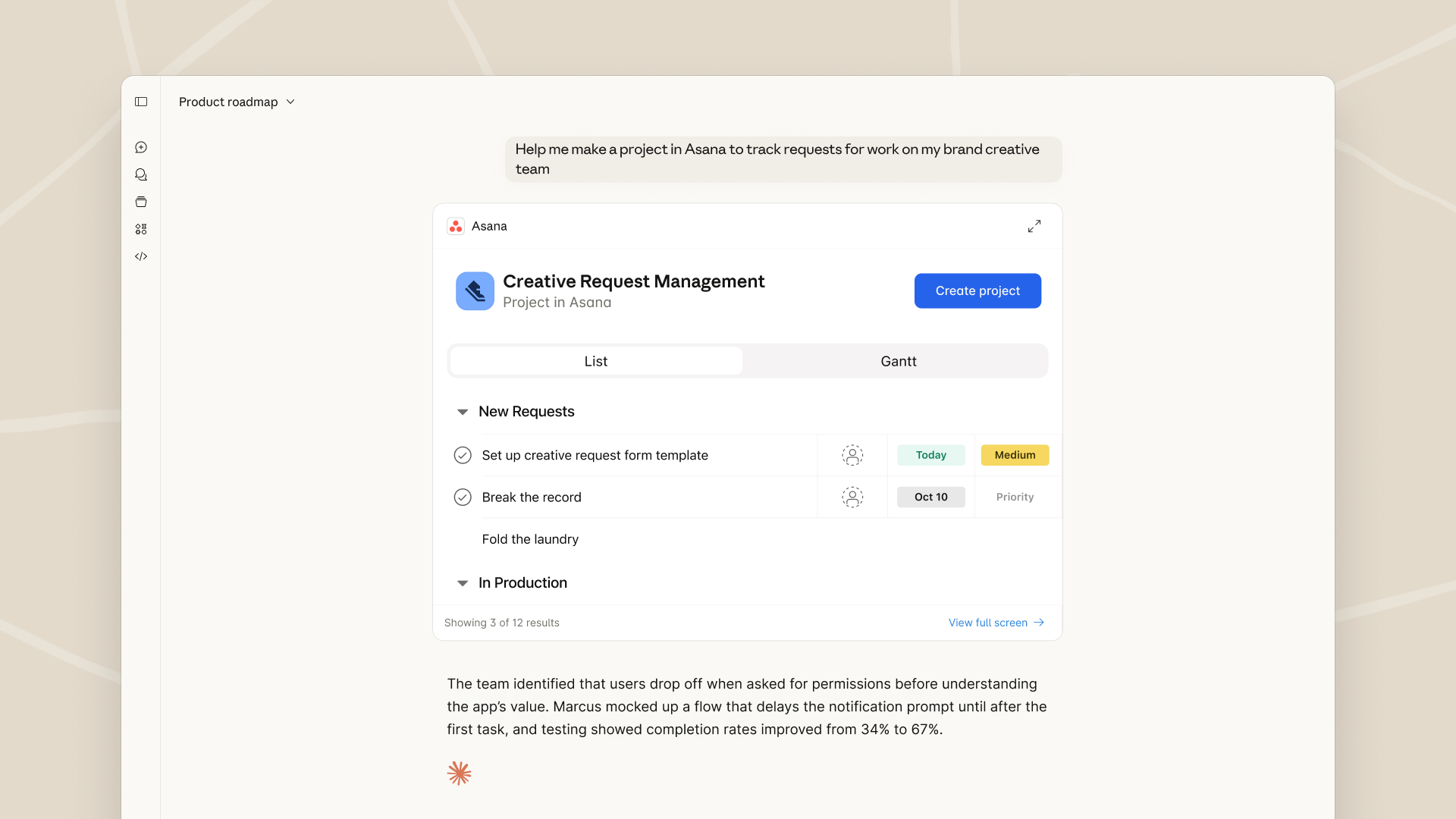

Look at what’s already happening in the market. At OpenAI’s DevDay in October 2025, the company launched an Apps SDK that lets fully interactive applications run directly inside ChatGPT conversations. Spotify, Expedia, Figma, and Instacart now have fully interactive interfaces inside ChatGPT. These are not links that take you elsewhere; they’re functional apps that render mid-conversation. Ask about flights, and you see interactive booking cards with filters and price comparisons. Ask about music, and you get a playable interface right there in the chat.

Anthropic launched similar capabilities recently. Services define interface components that render during conversations. Request meeting times, and an interactive calendar widget appears. Ask about sales trends, and you get charts you can manipulate. The interface adapts to what you need, exactly when you need it.

This expectation now transfers to every AI product users encounter. When your agent responds with text while competitors show interactive widgets, users notice immediately. They have had a better experience and expect your product to match that standard. Every major platform is essentially building an agent SDK with visual intelligence baked in.

Where visual AI agent UI makes the biggest difference

Visual intelligence transforms how users interact with your product in specific, measurable ways. The impact shows up in task completion speed and user confidence in results.

Data analysis becomes dramatically more effective. Again, this comes back to how people perceive information: a relationship that takes a paragraph to explain is visible at a glance in a chart. A user asking about quarterly performance does not want paragraphs explaining that revenue increased 15% while costs rose 8%. They want a chart where metric relationships are immediately clear, time-based patterns jump out, and they can drill into the segments that interest them. Visual representation makes obvious the patterns that stay invisible in text.

Complex comparisons see similar gains. When evaluating candidates for a role, reading paragraph descriptions of qualifications is tedious and makes meaningful comparison nearly impossible. A side-by-side comparison widget (where skills, experience, and qualifications are laid out in parallel) lets users evaluate options quickly and with confidence.

But this isn't just about data and visualization. Multi-step processes also get simpler with interactive forms. Instead of going back and forth through multiple text exchanges to book a meeting, users see a calendar widget where they can view availability, select a time, and complete the booking in a single interaction. The agent handles the backend logic while the visual interface makes the process feel natural.
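To make the calendar example concrete, here is a minimal TypeScript sketch of that split between backend logic and interface: the agent computes free slots and returns a structured widget payload plus a plain-text fallback, rather than describing availability in prose. The `AgentResponse` and `CalendarWidget` shapes and the `freeSlots` and `scheduleResponse` helpers are hypothetical and not taken from any particular agent SDK; times are epoch milliseconds in UTC, and the 30-minute slot length is an assumption for the sketch.

```typescript
// Hypothetical response shapes for illustration; not from any real agent SDK.
type Interval = { start: number; end: number }; // epoch milliseconds (UTC)

type CalendarWidget = {
  kind: "calendar";
  title: string;
  slots: { start: string; end: string }[]; // ISO 8601 timestamps
};

type AgentResponse = {
  text: string; // plain-text fallback for surfaces that cannot render widgets
  widget?: CalendarWidget; // structured payload a client can render as an interactive calendar
};

const SLOT_MS = 30 * 60_000; // 30-minute slots (assumption for this sketch)

// Domain logic: step through the working window and keep every slot that
// does not overlap a busy interval (intervals are half-open: [start, end)).
function freeSlots(dayStart: number, dayEnd: number, busy: Interval[]): Interval[] {
  const result: Interval[] = [];
  for (let t = dayStart; t + SLOT_MS <= dayEnd; t += SLOT_MS) {
    const slot = { start: t, end: t + SLOT_MS };
    const overlaps = busy.some((b) => slot.start < b.end && b.start < slot.end);
    if (!overlaps) result.push(slot);
  }
  return result;
}

// Return a widget the client can render instead of describing availability in paragraphs.
function scheduleResponse(dayStart: number, dayEnd: number, busy: Interval[]): AgentResponse {
  const slots = freeSlots(dayStart, dayEnd, busy);
  return {
    text: `${slots.length} open 30-minute slots found.`,
    widget: {
      kind: "calendar",
      title: "Pick a meeting time",
      slots: slots.map((s) => ({
        start: new Date(s.start).toISOString(),
        end: new Date(s.end).toISOString(),
      })),
    },
  };
}

// Example: 09:00-11:00 UTC with one meeting already booked at 09:30-10:00.
const response = scheduleResponse(
  Date.UTC(2025, 0, 6, 9),
  Date.UTC(2025, 0, 6, 11),
  [{ start: Date.UTC(2025, 0, 6, 9, 30), end: Date.UTC(2025, 0, 6, 10) }],
);
console.log(response.text); // prints "3 open 30-minute slots found."
```

The slot-finding function is the part a team would want to own and improve (preferences, conflict detection, smarter suggestions); the widget payload is just the shape the interface layer consumes.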

And status tracking becomes far clearer with visual dashboards. When checking project progress or pipeline health, users want status boards that update in real time, not text summaries they have to request repeatedly. Visual intelligence means agents surface the right widget showing the current state at a glance.

Why most teams struggle building an agent SDK in-house

Here is where most product teams hit a wall. Building visual intelligence for AI agents is not straightforward. The work multiplies in ways that are not obvious at first.

You need to build each widget individually. That competency gap visualization requires React code for the chart component, filtering logic, drill-down interactions, and data formatting. Then you need a calendar widget for scheduling, comparison tables for evaluation, and maybe even pipeline dashboards. Each one requires frontend development, design review, accessibility testing, and ongoing maintenance.

And on top of that, every widget needs to work across multiple surfaces. Your users are not just on your web app. They’re in Slack, Teams, mobile apps, and other channels. Each widget you build needs to render correctly and function properly everywhere. The engineering work compounds with each deployment target. If you’re exploring how to integrate AI into your product, this is often where teams underestimate the scope.

Brand consistency becomes its own challenge. Each widget needs to match your design system, use your colors and fonts, and feel like part of your product rather than a generic add-on. When five different developers build widgets, maintaining consistency requires constant design review and often significant rework.
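The way this work multiplies can be sketched in TypeScript. In this minimal example, assuming a hypothetical `Widget` union and two surfaces (`"web"` and `"plain-text"`), every widget kind needs a renderer on every surface, and the brand `Theme` is applied in one place inside the web renderer. None of these names come from a real SDK, and real channels such as Slack or Teams have their own formatting APIs that this sketch does not model.

```typescript
// Hypothetical widget spec, theme, and surfaces for illustration only.
type Theme = { primaryColor: string; fontFamily: string };

type Widget =
  | { kind: "bar-chart"; title: string; points: { label: string; value: number }[] }
  | { kind: "comparison"; title: string; columns: string[]; rows: string[][] };

type Surface = "web" | "plain-text";

// Minimal HTML escaping so labels cannot inject markup.
function esc(s: string): string {
  return s.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;").replace(/"/g, "&quot;");
}

// Web renderer: the single place where the brand theme touches every widget kind.
function renderWeb(w: Widget, theme: Theme): string {
  const style = esc(`font-family:${theme.fontFamily};color:${theme.primaryColor}`);
  switch (w.kind) {
    case "bar-chart": {
      const max = Math.max(1, ...w.points.map((p) => p.value));
      const bars = w.points
        .map((p) => `<div style="width:${Math.round((100 * p.value) / max)}%">${esc(p.label)}</div>`)
        .join("");
      return `<figure style="${style}"><figcaption>${esc(w.title)}</figcaption>${bars}</figure>`;
    }
    case "comparison": {
      const head = w.columns.map((c) => `<th>${esc(c)}</th>`).join("");
      const body = w.rows.map((r) => `<tr>${r.map((c) => `<td>${esc(c)}</td>`).join("")}</tr>`).join("");
      return `<table style="${style}"><caption>${esc(w.title)}</caption><tr>${head}</tr>${body}</table>`;
    }
  }
}

// Plain-text renderer for channels that cannot show interactive UI.
function renderText(w: Widget): string {
  switch (w.kind) {
    case "bar-chart":
      return [w.title, ...w.points.map((p) => `- ${p.label}: ${p.value}`)].join("\n");
    case "comparison":
      return [w.title, w.columns.join(" | "), ...w.rows.map((r) => r.join(" | "))].join("\n");
  }
}

function render(w: Widget, surface: Surface, theme: Theme): string {
  return surface === "web" ? renderWeb(w, theme) : renderText(w);
}

// Example usage with a made-up theme and chart.
const theme: Theme = { primaryColor: "#1a73e8", fontFamily: "Inter, sans-serif" };
const chart: Widget = {
  kind: "bar-chart",
  title: "Q3 revenue by region",
  points: [{ label: "EMEA", value: 50 }, { label: "APAC", value: 100 }],
};
console.log(render(chart, "plain-text", theme));
```

Adding a third widget kind means extending both `switch` statements; adding a third surface means writing a new renderer that covers every kind. That widgets-times-surfaces growth, plus keeping the theme consistent across all of it, is the ongoing work described above.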

This is why many teams delay implementing visual intelligence. The work feels overwhelming, engineering capacity is limited, and text-only responses look ‘good enough’ for now.

Build the intelligence, not the interface

If your team is building AI agents with visual capabilities, there's a strategic question worth confronting early: does building that infrastructure yourself actually create competitive advantage?

Consider this: every company building AI agents needs largely the same visual infrastructure. Calendar widgets work the same whether they are in sales software, HR platforms, or logistics tools. Chart components follow the same interaction patterns regardless of industry. Form builders solve the same problems everywhere. Implementation details vary, but the fundamental capabilities of any agent SDK (widget rendering, multi-surface deployment, brand theming) are commodities.

What actually differentiates your product is not having a calendar widget. It’s the intelligence behind how your agent uses that calendar: understanding user scheduling preferences, recognizing conflicts, and suggesting optimal times based on patterns competitors don't see. The widget is just the interface. Competitive advantage lives in the domain logic and proprietary intelligence that makes your agent smarter than alternatives.

If you’re building sales software, your differentiation isn't rendering pipeline dashboards; it’s predicting which deals will close based on signals competitors miss. Building HR platforms? It’s not the competency gap visualization but rather the analysis that identifies those gaps more accurately than any other tool. Logistics software? It’s not the map widget, but knowing where delays will happen before they occur.

Engineering capacity is not infinite. Every hour building widget infrastructure is an hour not spent building intelligence that makes your product uniquely valuable. Every sprint on multi-surface deployment is a sprint not spent on domain expertise creating competitive distance.

How teams are shipping visual AI faster with an agentic frontend platform

The teams shipping visual AI agents fastest have figured out something important: they don’t need to build everything themselves. They’re making strategic decisions about which layers to build and which to leverage from platforms specializing in this infrastructure.

They treat visual intelligence infrastructure like cloud hosting. Most companies no longer build their own data centers because AWS and Azure provide infrastructure more reliably than they could on their own. The same logic applies to agentic frontends, the complete frontend layer for AI agents. A purpose-built agent SDK handles conversational interfaces, widget rendering, multi-surface deployment, and brand consistency: infrastructure every company needs in much the same way.

Instead of spending months building widget libraries, these teams describe what they need and generate production-ready React code matching their design system. Instead of manually handling multi-surface deployment, they use platforms that handle complexity automatically. Instead of building brand management systems from scratch, they configure colors, fonts, and component libraries once, and everything stays consistent.

This approach redirects engineering capacity toward the intelligence layer that actually differentiates their product. Instead of debugging widget rendering issues, developers build better prediction models. Instead of maintaining component libraries, designers optimize user experiences based on real usage patterns. Instead of coordinating multi-surface deployments, product managers ship features that create customer value.

Making the decision that accelerates your product roadmap

If you are leading product or engineering, this decision is in front of you now. People retain far more of what they see than of what they read, and users now expect visual, interactive AI experiences. The question is how to deliver that capability without derailing your roadmap or fragmenting engineering capacity across infrastructure work.

What differentiates your product is intelligence that determines when to use which widget, domain logic that makes your agent smarter than alternatives, and proprietary insights that solve customer problems better than any other solution. That is where engineering capacity should concentrate.

While you may be spending quarters on widget libraries and multi-surface deployment, competitors are learning from users, iterating on intelligence, and capturing market share. While you debug brand consistency issues, they ship their third generation of AI features based on real feedback.

The path forward is straightforward: leverage an agentic frontend platform for the infrastructure everyone needs, and build the intelligence that makes your product uniquely valuable.

By year-end, text-only AI responses will feel as outdated as DOS commands. The winners will be teams that made strategic decisions about where to build and where to leverage platforms shipping visual intelligence users actually want.

Book a demo today.
