Why Building Your Own Agentic Frontend Takes Longer Than You Think (And Why That Matters Now)

You've seen it on the product roadmap. The CTO approved the timeline, the engineers are confident, and you've allocated six to eight weeks to build a conversational AI interface that can surface inside your product, handle dynamic requests, and deploy across web, mobile, WhatsApp, Slack, and Teams.

Three months later, you've got a working chat widget on staging, but it doesn't support voice input yet. The widget system isn't finished; multi-surface deployment keeps breaking; authentication needs a complete rework; and memory compliance is still on the backlog. Your engineers haven't even started on the AI capabilities that actually make your product valuable.

This keeps happening because teams chronically underestimate what goes into an agentic frontend. Unlike traditional UIs, where you control exactly what appears and when, agentic frontends must dynamically generate interfaces across channels you don't control, respond to requests you can't predict, and handle conversational flows that branch in hundreds of directions. As a result, timelines end up badly off: the real scope stays invisible until you're deep into the build and still nowhere near done.

The hidden complexity nobody mentions upfront

When most teams estimate building conversational AI, they focus on the visible parts (the chat interface, the streaming responses, and maybe voice input if they're being thorough). Yet according to industry estimates, even these base elements take six to twelve months to build for a customized system. And that's just the beginning.

The real timeline explosion occurs when you realize how much infrastructure lies beneath the surface. You're not building a chat widget. You're building a complete conversational platform that needs to work in unpredictable and complex scenarios you may not have known existed when you started scoping it. Here are just a handful of the challenges:

Conversational interface

The conversational interface itself is more than a text box and a send button. You need streaming responses that feel fluid rather than choppy, voice input that works across devices, rich media handling for images, videos, and file uploads, and citation rendering so users can trace the source of information. Each of these seems straightforward in isolation, right up until you're debugging why voice recognition fails in Safari but works perfectly in Chrome.

Widget system

Then there's the widget system. Traditional apps show fixed screens. Agentic frontends need to dynamically render interface components inside conversations based on user requests. If someone requests flight options, you surface an interactive booking widget with filters and sort options. If they ask about sales performance, you generate a chart they can manipulate. Each widget is custom React code that needs to work reliably across every surface you support.
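
As a rough illustration of what rendering components "based on user requests" involves, here is a minimal TypeScript sketch of an intent-to-widget registry. It assumes an upstream step (for example, an LLM tool call) has already classified the request into a named intent. The intent names, widget kinds, and the `selectWidget` function are hypothetical, and the React components that would actually draw each spec are omitted.

```typescript
// Hypothetical widget specs: plain data that a React renderer (not shown) would turn into UI.
type WidgetSpec =
  | { kind: "flight-booking"; filters: string[]; sortOptions: string[] }
  | { kind: "chart"; metric: string; chartType: "bar" | "line" }
  | { kind: "text"; body: string };

// Hypothetical intent shape, assumed to come from an upstream classifier or LLM tool call.
interface Intent {
  name: string;
  params: Record<string, string>;
}

type WidgetFactory = (intent: Intent) => WidgetSpec;

const flightSearch: WidgetFactory = () => ({
  kind: "flight-booking",
  filters: ["stops", "airline", "departure_time"],
  sortOptions: ["price", "duration"],
});

const salesPerformance: WidgetFactory = (intent) => ({
  kind: "chart",
  metric: intent.params.metric ?? "revenue", // default metric when none was requested
  chartType: "line",
});

// Registry mapping intent names to the factory that builds their widget.
const registry = new Map<string, WidgetFactory>([
  ["flight_search", flightSearch],
  ["sales_performance", salesPerformance],
]);

// Unknown intents degrade to a plain-text reply instead of failing.
function selectWidget(intent: Intent): WidgetSpec {
  const factory = registry.get(intent.name);
  return factory
    ? factory(intent)
    : { kind: "text", body: `No widget is available for "${intent.name}".` };
}

console.log(selectWidget({ name: "sales_performance", params: { metric: "bookings" } }).kind); // chart
```

Keeping widgets as plain data specs, rather than returning components directly, is one way to let a single decision feed several renderers, one per surface.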

Multi-surface deployment

Multi-surface deployment compounds all of this, because what works perfectly on your website breaks on mobile, and what renders fine on mobile doesn't display correctly in Slack. WhatsApp has completely different constraints, Teams has its own quirks, and suddenly you're not maintaining one interface but five or six, each with unique limitations and edge cases that your team has to discover and solve individually.
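
One common way to cope with these differences is graceful degradation: the same logical widget is rendered at whatever level of richness each surface allows. The sketch below assumes three illustrative capability tiers and a hypothetical `renderFlights` function. The tier assigned to each surface is an assumption for this example, not a description of what Slack, Teams, or WhatsApp actually support.

```typescript
type Surface = "web" | "mobile" | "slack" | "teams" | "whatsapp";

// Illustrative capability tiers; these assignments are assumptions for this sketch,
// not a statement of what each platform really supports.
type Tier = "interactive" | "buttons" | "text";

const surfaceTier: Record<Surface, Tier> = {
  web: "interactive",
  mobile: "interactive",
  slack: "buttons",
  teams: "buttons",
  whatsapp: "text",
};

interface FlightOption {
  airline: string;
  price: number;
}

// What the channel adapter receives for each tier.
type Rendered =
  | { tier: "interactive"; component: "FlightPicker"; options: FlightOption[] }
  | { tier: "buttons"; text: string; buttons: string[] }
  | { tier: "text"; text: string };

// Render one logical widget for a given surface, degrading to simpler forms as needed.
function renderFlights(surface: Surface, options: FlightOption[]): Rendered {
  const summary = options.map((o, i) => `${i + 1}. ${o.airline} $${o.price}`).join("\n");
  const tier = surfaceTier[surface];
  switch (tier) {
    case "interactive":
      // Rich surfaces get the full component with filters and sorting.
      return { tier: "interactive", component: "FlightPicker", options };
    case "buttons":
      // Chat platforms with buttons get a summary plus one action per option.
      return { tier: "buttons", text: summary, buttons: options.map((o) => `Book ${o.airline}`) };
    case "text":
      // Text-only surfaces fall back to a numbered list and a reply instruction.
      return { tier: "text", text: `${summary}\nReply with a number to book.` };
  }
}
```

Each new surface then means adding a tier mapping and an adapter, rather than rewriting every widget.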

Authentication

Authentication is another area that becomes surprisingly complex once you dig in. You need single sign-on integration with your existing identity systems, role-based access control so different users see different capabilities, and multi-tenant architecture ensuring customer A's data never appears in customer B's conversations. Add session management across surfaces and token refresh handling, and each piece tacks weeks onto your timeline that nobody accounted for.
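
The multi-tenant and role-based pieces can be sketched as a store that takes the tenant from the authenticated session and scopes every query by it. This is a minimal in-memory sketch: the roles, capabilities, and `ConversationStore` class are hypothetical, and it assumes SSO token verification and refresh happen elsewhere.

```typescript
// Hypothetical roles and capabilities for illustration.
type Role = "viewer" | "analyst" | "admin";
type Capability = "read_conversations" | "export_data" | "manage_users";

const roleCapabilities: Record<Role, ReadonlySet<Capability>> = {
  viewer: new Set<Capability>(["read_conversations"]),
  analyst: new Set<Capability>(["read_conversations", "export_data"]),
  admin: new Set<Capability>(["read_conversations", "export_data", "manage_users"]),
};

// Claims assumed to come from an already-verified SSO token (verification not shown).
interface Session {
  userId: string;
  tenantId: string;
  role: Role;
}

interface Message {
  tenantId: string;
  conversationId: string;
  text: string;
}

function can(session: Session, capability: Capability): boolean {
  return roleCapabilities[session.role].has(capability);
}

class ConversationStore {
  private messages: Message[] = [];

  append(session: Session, conversationId: string, text: string): void {
    // The tenant comes from the session, never from client-supplied input.
    this.messages.push({ tenantId: session.tenantId, conversationId, text });
  }

  read(session: Session, conversationId: string): Message[] {
    if (!can(session, "read_conversations")) throw new Error("forbidden");
    // Every query is scoped by tenant, so tenant A can never read tenant B's messages.
    return this.messages.filter(
      (m) => m.tenantId === session.tenantId && m.conversationId === conversationId,
    );
  }
}
```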

Memory infrastructure

Memory infrastructure is another hidden beast. You need persistent context that remembers user preferences, conversation history, and relevant documents across sessions. That memory must be GDPR compliant: users need access controls, data portability, and the right to deletion, which means exporting or deleting their memory on demand. It needs to be ready for the EU AI Act, with transparency logs and human oversight capabilities. You also need data residency controls for customers who require information stored in specific regions, and encryption both at rest and in transit.
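
A minimal in-memory sketch of the deletion, export, and residency requirements follows. `RegionalMemoryStore`, the region codes, and the audit-log shape are hypothetical. Encryption, durable storage, and a legal reading of what GDPR or the EU AI Act actually require are out of scope here.

```typescript
// Hypothetical region codes and record shape for illustration only.
type Region = "eu" | "us";

interface MemoryRecord {
  userId: string;
  kind: "preference" | "history" | "document";
  content: string;
  createdAt: string; // ISO 8601 timestamp
}

// A simple audit trail, standing in for the transparency logging the text describes.
interface AuditEntry {
  action: "export" | "delete";
  userId: string;
  at: string;
}

class RegionalMemoryStore {
  // One partition per region, so a user's records stay in their assigned region.
  private partitions = new Map<Region, MemoryRecord[]>();
  private readonly userRegion: (userId: string) => Region;
  readonly auditLog: AuditEntry[] = [];

  // The caller supplies the rule that maps each user to a residency region.
  constructor(userRegion: (userId: string) => Region) {
    this.userRegion = userRegion;
  }

  private partitionFor(userId: string): MemoryRecord[] {
    const region = this.userRegion(userId);
    let records = this.partitions.get(region);
    if (!records) {
      records = [];
      this.partitions.set(region, records);
    }
    return records;
  }

  remember(record: MemoryRecord): void {
    this.partitionFor(record.userId).push(record);
  }

  // Data portability: return everything held about a user as JSON.
  exportUser(userId: string): string {
    this.auditLog.push({ action: "export", userId, at: new Date().toISOString() });
    return JSON.stringify(this.partitionFor(userId).filter((r) => r.userId === userId));
  }

  // Right to deletion: remove all of a user's records and return how many were deleted.
  deleteUser(userId: string): number {
    const records = this.partitionFor(userId);
    const kept = records.filter((r) => r.userId !== userId);
    const removed = records.length - kept.length;
    records.splice(0, records.length, ...kept); // mutate in place so the partition stays shared
    this.auditLog.push({ action: "delete", userId, at: new Date().toISOString() });
    return removed;
  }
}
```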

AI orchestration layer

All of the above comes before you've even touched the AI orchestration layer, which involves determining which services to call in what sequence, routing requests to the right backends, handling failures gracefully, managing rate limits, implementing fallback strategies, and monitoring performance across the whole system.
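
The failure-handling part of orchestration can be sketched as trying backends in priority order, with exponential backoff between retries. The `Backend` type and `callWithFallback` function are hypothetical and not tied to any particular provider SDK.

```typescript
// Hypothetical backend shape: any named async function that answers a prompt.
type Backend = { name: string; call: (prompt: string) => Promise<string> };

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Try each backend in priority order, retrying failures with exponential backoff.
async function callWithFallback(
  backends: Backend[],
  prompt: string,
  maxAttempts = 3,
  baseDelayMs = 100,
): Promise<{ backend: string; output: string }> {
  const errors: string[] = [];
  for (const backend of backends) {
    for (let attempt = 1; attempt <= maxAttempts; attempt++) {
      try {
        const output = await backend.call(prompt);
        return { backend: backend.name, output };
      } catch (err) {
        errors.push(`${backend.name} attempt ${attempt}: ${String(err)}`);
        // Wait baseDelayMs, then 2x, 4x, ... before the next attempt on this backend.
        if (attempt < maxAttempts) await sleep(baseDelayMs * 2 ** (attempt - 1));
      }
    }
  }
  throw new Error(`All backends failed:\n${errors.join("\n")}`);
}
```

This sketch treats every error as retryable. A production version would likely separate rate-limit errors (and honor any retry hints the provider returns) from permanent failures such as invalid requests, and would emit metrics for the performance monitoring mentioned above.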

Even if you account for all of this, the timeline still breaks because agentic frontends don't follow traditional development rules. When you build a traditional interface, you plan exactly what users will see. This screen leads to that screen, this button triggers that action, and you map the entire flow in advance, build each piece, test the paths, and ship. The scope is knowable, and the timeline is predictable because everything is predetermined.

With agentic frontends, you can't predict what users will request or how they'll phrase it, so the interface must be generated dynamically based on intent, context, and available capabilities. This means building systems that handle an open-ended range of requests rather than predetermined flows.

The maintenance burden that never ends

You ship the first version, it works, users are happy, and then the real work begins, because foundation models evolve constantly. A new Claude release responds with different patterns, a new GPT version adds capabilities that change how prompts should be structured, and your carefully tuned system needs adjustment. This isn't a one-time update but continuous refinement as the underlying models shift beneath you.

User expectations also change faster than they do with traditional software. Someone uses ChatGPT's new feature and immediately expects it in your product, while industry standards for conversational interfaces evolve every few months. What felt cutting-edge at launch can feel dated six months later, and you're competing not just with other B2B software but with consumer AI experiences users interact with daily.

According to the State of Enterprise AI Adoption Report, only 31% of AI use cases will be in full production by 2026, and the ongoing maintenance burden is likely a significant reason. According to McKinsey, maintenance typically represents 20% of the original development cost each year for software systems, reflecting ongoing work to manage technical debt, update dependencies, and sustain performance.
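
To make the 20% figure concrete, suppose the initial build costs $C$ and maintenance stays flat at 20% of $C$ per year (a simplifying assumption that ignores scope growth and inflation). Cumulative cost after $n$ years is then:

```latex
\text{Total}(n) = C + n \cdot 0.2\,C = C\,(1 + 0.2\,n),
\qquad
\text{Total}(3) = 1.6\,C,
\qquad
\text{Total}(5) = 2\,C
```

Under that assumption, five years of upkeep costs as much again as the original build.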

Why this timing pressure matters more than usual

Conversational interfaces aren't optional additions to your product anymore. Over 1 billion people use AI interfaces monthly, and the expectations they form there now carry over to every product they touch.

Your customers aren't comparing you to competitors who also lack conversational AI; they're comparing you to ChatGPT. They're judging whether your interface feels as fluid as Claude. They expect to describe what they need and have your product understand and respond intelligently.

All of that means the window for differentiation is closing rapidly. According to Master of Code Global's conversational AI trends research, 84% of enterprises now use conversational AI tools to close IT gaps and speed up deployment cycles. Nearly every company in your market is either building conversational interfaces for customers or using them internally. The teams that ship first set user expectations; the ones that ship later must match or exceed that baseline just to seem competent.

According to Gartner research, 70% of new applications will use low-code or no-code technologies by 2026, up from less than 25% in 2020. In other words, more and more teams are assembling applications from existing building blocks rather than writing everything from scratch. So while you're six months into building chat infrastructure, your competitors could be six months into learning from users, refining their AI capabilities, and capturing market share. The opportunity cost compounds daily.

What actually accelerates the timeline

You can't shortcut the complexity, but you can change which complexity you tackle and which you rent, and that distinction makes all the difference.

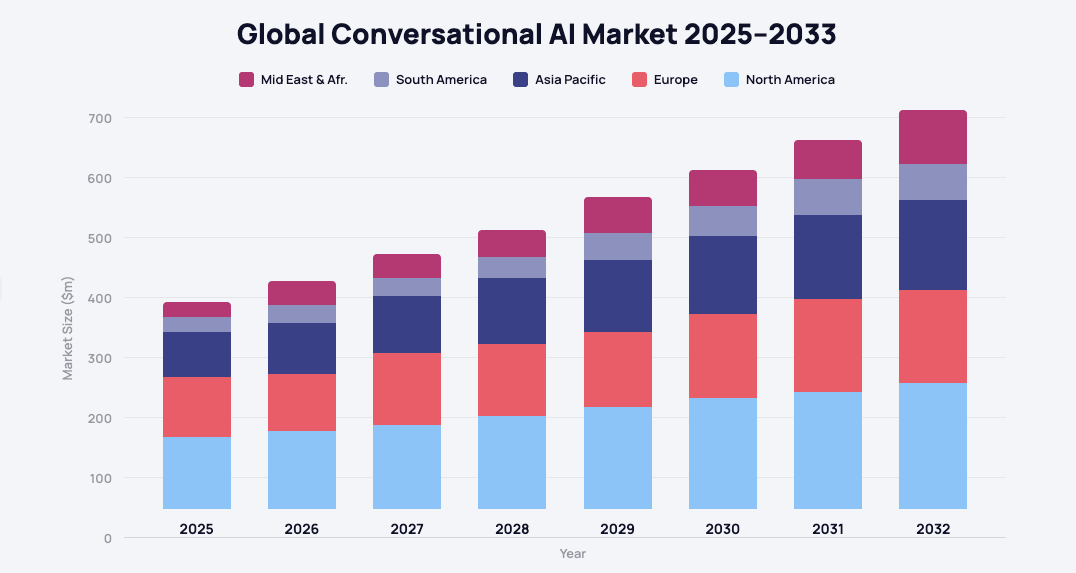

The conversational interface layer is fundamentally commodity infrastructure. Every company needs text, voice, and rich media handling. These capabilities don't differentiate your product; they're table stakes for participating in the conversational AI market, and that market is growing fast.

Think about e-commerce for a moment. Nobody builds payment processing from scratch, because Stripe exists. Similarly, nobody builds shopping cart infrastructure, because Shopify solved it. Instead, you focus on storefront design, product catalogue, and brand experience: the things that make your e-commerce site yours, not someone else's. The agentic frontend is the same category of decision. The presentation layer needs to work flawlessly, but it's not where competitive advantage lives. Competitive advantage is in the AI capabilities you're building: the domain knowledge your agents have, the workflows they can automate, and the intelligence they bring to your specific problem space.

Platforms that handle the frontend layer let you ship conversational interfaces in weeks instead of quarters, so engineering teams can focus entirely on the intelligence layer that makes your product unique. That layer is where differentiation comes from, while chat interfaces remain commodity infrastructure that doesn't need to be rebuilt from scratch every time.

The decision matters now because your competitors are making the same calculation. Some will spend a year building infrastructure. Others will ship in weeks by focusing on what differentiates them. The teams winning in this market are the ones who understood where to focus their engineering talent and moved fast.

Book a demo today.
