Why Protocol-Based AI Infrastructure Is Replacing Custom Integrations

You’ve been here before. Your team spins up a proof of concept, connects an LLM to a data source, builds a decent interface… And it works! Then someone asks you to connect a second data source, followed by a third. Suddenly, your AI infrastructure is 80% integration code and 20% actual intelligence.

This is the problem Anthropic described when they released MCP. Without a shared protocol, every pairing of data source and AI system needs its own integration. Five data sources and five AI systems means twenty-five custom connectors, each with its own auth handling, error states, and maintenance overhead.

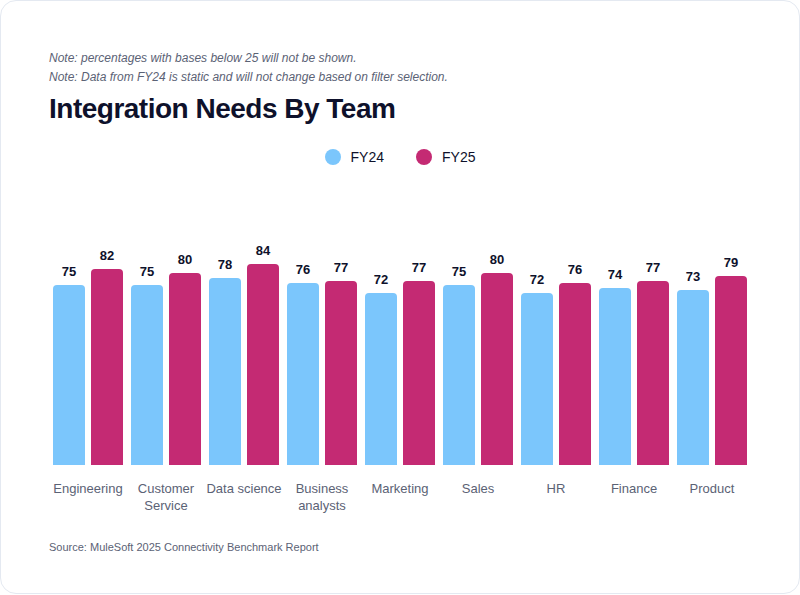
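The arithmetic behind that figure is easy to check. The sketch below is illustrative only (the function names are made up for this example). It compares point-to-point connectors with a shared protocol, assuming each data source needs one server and each AI system needs one client implementation.

```python
def point_to_point_connectors(data_sources: int, ai_systems: int) -> int:
    """Every AI system needs its own connector to every data source."""
    return data_sources * ai_systems


def protocol_components(data_sources: int, ai_systems: int) -> int:
    """Shared protocol: one server per data source plus one client per AI system."""
    return data_sources + ai_systems


# Compare how the two approaches grow as the stack gets larger.
for n in (2, 5, 10):
    print(n, point_to_point_connectors(n, n), protocol_components(n, n))
```

The first count grows multiplicatively and the second additively, which is why the gap widens with every system you add.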

The numbers are worse than most teams expect. According to MuleSoft’s 2025 Connectivity Benchmark Report, the average organisation runs 897 applications. Only 29% are integrated, and just 2% of businesses have connected more than half their stack. Custom integration doesn’t scale, and it never did. AI just made the problem impossible to ignore.

How MCP is reshaping AI infrastructure

The Model Context Protocol shipped in November 2024 and moved from experiment to industry standard faster than most protocols manage. OpenAI adopted it across its products in March 2025, Google DeepMind followed shortly after, and Microsoft integrated it into Azure OpenAI, all before the Linux Foundation’s Agentic AI Foundation took over governance of the spec.

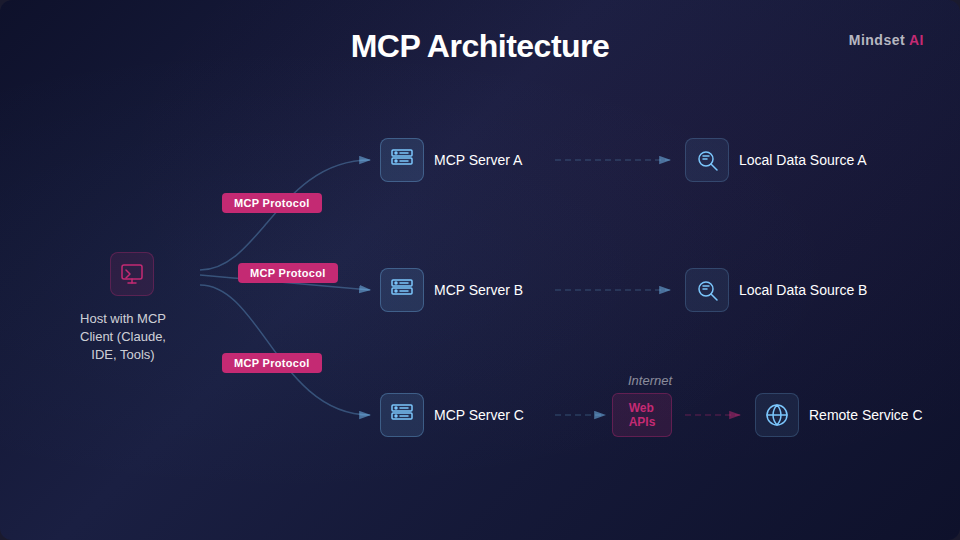

For engineers, the value proposition is straightforward: you build one MCP server that exposes your platform's capabilities through a standardised interface, and that server works with any AI system supporting the protocol. One integration, every client.

The architecture is simple by design. An MCP server is a lightweight process that exposes tools, resources, and prompts as JSON-RPC 2.0 messages, carried over a transport such as stdio or Streamable HTTP. Clients discover capabilities at runtime through the protocol’s built-in capability negotiation: no SDK lock-in, no proprietary middleware. The MCP Registry launched in preview in September 2025, and major platforms like Notion, Stripe, GitHub, and Postman have already published servers.
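To make runtime discovery concrete, here is a minimal sketch of the message exchange using only the Python standard library. It is a toy dispatcher, not the official SDK: the `search_tickets` tool and the `handle` function are hypothetical, and a real session would start with an `initialize` handshake that is omitted here.

```python
import json

# Hypothetical tool catalogue for an imaginary ticketing service.
TOOLS = [
    {
        "name": "search_tickets",
        "description": "Search support tickets by free-text query.",
        "inputSchema": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    }
]


def handle(raw: str) -> str:
    """Answer a single JSON-RPC 2.0 request (toy dispatcher, not the MCP SDK)."""
    request = json.loads(raw)
    if request.get("method") == "tools/list":
        body = {"result": {"tools": TOOLS}}
    else:
        # -32601 is the JSON-RPC 2.0 error code for "Method not found".
        body = {"error": {"code": -32601, "message": "Method not found"}}
    return json.dumps({"jsonrpc": "2.0", "id": request.get("id"), **body})


# A client asks what the server offers instead of relying on hardcoded knowledge.
request = json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
response = json.loads(handle(request))
tool_names = [tool["name"] for tool in response["result"]["tools"]]
print(tool_names)
```

The client learns the tool name, description, and input schema from the response, so nothing about the ticketing service has to be compiled into it.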

What makes this different from previous “universal connector” attempts is the protocol’s design around AI-native patterns. MCP servers don’t just expose CRUD endpoints. They expose tools with natural language descriptions that AI models can reason about, resources that provide structured context, and prompts that encode domain-specific workflows. A client connecting to your server automatically understands what it can do without hardcoded schemas or manual configuration.
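Once a model has read a tool’s description and chosen it, the call itself is a plain `tools/call` request. The following sketch assumes a hypothetical `search_tickets` tool and a hand-rolled `call_tool` dispatcher. It shows the general shape of the request and result rather than the SDK’s own implementation.

```python
from typing import Any, Callable


def search_tickets(query: str) -> str:
    """Hypothetical tool body; a real one would query the ticketing system."""
    return f"0 tickets match {query!r}"


# Maps tool names to the Python callables that implement them.
REGISTRY: dict[str, Callable[..., str]] = {"search_tickets": search_tickets}


def call_tool(request: dict[str, Any]) -> dict[str, Any]:
    """Run the named tool and wrap its output as a text content item."""
    params = request["params"]
    tool = REGISTRY[params["name"]]
    text = tool(**params.get("arguments", {}))
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"content": [{"type": "text", "text": text}], "isError": False},
    }


result = call_tool(
    {
        "jsonrpc": "2.0",
        "id": 7,
        "method": "tools/call",
        "params": {"name": "search_tickets", "arguments": {"query": "vpn"}},
    }
)
print(result["result"]["content"][0]["text"])
```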

The implementation barrier is low. The official TypeScript and Python SDKs handle protocol negotiation, message framing, and transport. A typical MCP server for an internal service can go from zero to functional in a day. Multi-tenant isolation, which matters when running production workloads across different customer environments, is not something the protocol provides by default, so plan for it in how you deploy servers and scope credentials.

The cost of custom AI infrastructure

Custom API integrations are expensive. Estimates range from $2,000 to $30,000 per integration, with annual maintenance hitting $150,000 per connector. But the real cost is opportunity cost. Every sprint your team spends building plumbing is a sprint they’re not building the capabilities that differentiate your product.

And the pace is only accelerating. Gartner predicts 40% of enterprise applications will include task-specific AI agents by the end of 2026, up from under 5% in 2025. You can’t ship agents at that pace if every integration is bespoke. A single agent might need access to your CRM, your ticketing system, your knowledge base, and your calendar, all in one workflow. With custom connectors, that’s four separate integrations to build, test, and maintain per agent. With MCP, it’s four servers that any agent can use.

Portability: the agentic AI platform lock-in question

Every technical buyer asks about vendor lock-in when evaluating AI infrastructure. It’s the right question. One healthcare network discovered that switching cloud providers would cost $8.5 million due to proprietary dependencies. Protocol-based infrastructure changes this equation.

MCP is an open standard with a public spec and open-source SDKs. If a better model launches tomorrow, your servers can work with it as soon as its client supports the protocol. Your investment goes into building standard servers, not proprietary connectors tied to a single vendor’s ecosystem.

Forrester predicts 30% of enterprise app vendors will launch their own MCP servers this year. Databricks and Snowflake already have native MCP support. Cloudflare supports MCP server deployment. And MCP isn’t the only initiative. Cisco, LangChain, LlamaIndex, and others launched AGNTCY, an open-source project to establish interoperability standards for agents. The Financial Services Institute released a white paper featuring a four-stage interoperability maturity model. The industry is converging on the idea that agents need shared protocols to work across systems, and MCP is the most widely adopted implementation of that idea.

Why governance gets simpler with protocol-based AI infrastructure

2025 was the year teams ran AI pilots. 2026 is the year those pilots need to run in production, with real users, real data, and real compliance requirements. That shift exposes every shortcut in your integration architecture.

Custom integrations create governance headaches. Each connector needs separate security review. Each connection point is a potential compliance risk. When you have dozens of integrations, tracking data flows becomes nearly impossible.

Protocol-based AI infrastructure centralises these concerns. MCP defines a standard authorisation flow for HTTP-based transports, built on OAuth 2.1, and because every tool call travels through the same message format, audit logging can be added in one place rather than per connector. Your security team reviews the protocol implementation once, not every individual integration. As your agentic AI platform capabilities grow, your security posture scales with them instead of fragmenting.

The practical migration path

If your team is running custom integrations today, you don’t need to rebuild everything at once. The pragmatic approach is incremental.

Start with your next integration project. Instead of writing custom connector code, implement an MCP server. Run it alongside your existing integration and validate parity. Tools such as the MCP Inspector and the SDKs’ client libraries let you exercise a server programmatically, so you can write integration tests that compare outputs between your legacy connector and the new server before cutting over.
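A parity check of this kind can be very small. The sketch below is an assumption-laden illustration: `legacy_connector` and `mcp_backed_lookup` are hypothetical stand-ins for your existing connector and for a client call into the new server, and the `fetched_at` field is an invented example of a volatile value you would exclude from the comparison.

```python
from typing import Any, Callable

Fetch = Callable[[str], dict[str, Any]]


def legacy_connector(customer_id: str) -> dict[str, Any]:
    """Stand-in for the existing custom integration."""
    return {"id": customer_id, "plan": "pro", "fetched_at": "2026-01-01T00:00:00Z"}


def mcp_backed_lookup(customer_id: str) -> dict[str, Any]:
    """Stand-in for the same lookup routed through the new MCP server."""
    return {"id": customer_id, "plan": "pro", "fetched_at": "2026-01-01T00:00:05Z"}


def parity_mismatches(
    old: Fetch,
    new: Fetch,
    inputs: list[str],
    ignore: frozenset[str] = frozenset({"fetched_at"}),
) -> list[str]:
    """Return the inputs for which the two paths disagree, ignoring volatile fields."""
    mismatches = []
    for value in inputs:
        expected = {k: v for k, v in old(value).items() if k not in ignore}
        actual = {k: v for k, v in new(value).items() if k not in ignore}
        if expected != actual:
            mismatches.append(value)
    return mismatches


print(parity_mismatches(legacy_connector, mcp_backed_lookup, ["c1", "c2"]))
```

Run against a sample of real inputs, an empty list is the signal that the new server is safe to cut over to.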

Prioritise migrations by maintenance burden. The connectors that break most often, require the most patching when upstream APIs change, or create the biggest bottlenecks should move first. Each migration reduces technical debt and frees up engineering capacity.
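One way to turn that into a ranked backlog is a simple weighted score. Everything in this sketch is assumed for illustration: the connector names, the sample counts, and especially the weights, which you would tune to your own incident data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Connector:
    name: str
    incidents_per_quarter: int          # how often it breaks
    upstream_patches_per_quarter: int   # fixes forced by upstream API changes
    blocked_teams: int                  # teams waiting on it


def burden(connector: Connector) -> int:
    """Illustrative score; the 3/2/1 weights are assumptions, not a standard."""
    return (
        3 * connector.incidents_per_quarter
        + 2 * connector.upstream_patches_per_quarter
        + connector.blocked_teams
    )


connectors = [
    Connector("calendar", 1, 1, 0),
    Connector("crm", 4, 3, 2),
    Connector("analytics", 2, 5, 1),
]

# Highest burden first: these are the connectors to migrate earliest.
migration_order = [c.name for c in sorted(connectors, key=burden, reverse=True)]
print(migration_order)
```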

For teams already running API-led architectures, the path is even shorter. If you’ve invested in well-structured REST APIs, wrapping them in MCP servers is mostly a mapping exercise. Your existing API handles the business logic. The MCP server handles discovery, context, and protocol negotiation. You’re not rewriting your stack. You’re adding a standard interface layer on top of it.
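The mapping can often be derived from route metadata you already have. This sketch assumes a hypothetical route table (the paths, summaries, and parameter types are invented) and turns each entry into a tool descriptor with a name, a description, and a JSON Schema for its inputs. Forwarding the call to the REST API is left out.

```python
from typing import Any

# Hypothetical REST routes, e.g. as they might be extracted from an OpenAPI document.
ROUTES = [
    {
        "method": "GET",
        "path": "/customers/{customer_id}",
        "summary": "Fetch one customer record.",
        "params": {"customer_id": "string"},
    },
    {
        "method": "POST",
        "path": "/tickets",
        "summary": "Open a support ticket.",
        "params": {"subject": "string", "priority": "integer"},
    },
]


def tool_name(route: dict[str, Any]) -> str:
    """Build a name from the HTTP method and the non-templated path segments."""
    segments = [s for s in route["path"].strip("/").split("/") if not s.startswith("{")]
    return "_".join([route["method"].lower(), *segments])


def to_tool(route: dict[str, Any]) -> dict[str, Any]:
    """Map one REST route to a tool descriptor (all parameters treated as required)."""
    return {
        "name": tool_name(route),
        "description": route["summary"],
        "inputSchema": {
            "type": "object",
            "properties": {name: {"type": kind} for name, kind in route["params"].items()},
            "required": sorted(route["params"]),
        },
    }


tools = [to_tool(route) for route in ROUTES]
print([tool["name"] for tool in tools])
```

In practice the summaries deserve more care than the rest of the mapping, because the description is what a model reads when deciding whether to call the tool.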

And here’s the thing: the calendar integration, the CRM connector, and the analytics pipeline are not competitive advantages. They’re commodity AI infrastructure that every company builds identically. The teams shipping fastest right now aren’t writing the most custom code. They’re the ones who chose the right infrastructure layer, freed up their engineers, and pointed that capacity at features their users actually care about.

Book a demo today.
